In Kaggle machine learning competitions, two techniques tend to dominate: ensembles of decision trees for structured data, and neural networks when the data includes images or sound. Random Forest was traditionally the leading choice for structured data, but another algorithm has recently overtaken it in these competitions: Gradient Boosted Trees.

Like Random Forest (RF), Gradient Boosted Trees (GBT) classifies examples using an ensemble of decision trees. In GBT, however, the trees are built sequentially: each iteration adds the tree that best compensates for the errors of the trees already in the ensemble. The method is called gradient boosting because each new tree moves the model a step down the gradient of the error, so the model evolves tree by tree towards a minimum of the error.
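The sequential idea can be sketched in a few lines of plain Python. This is a minimal illustration, not how any production library implements it: it assumes a one-dimensional regression problem, squared-error loss, and one-split trees ("stumps") as the weak learners. All function names (`fit_stump`, `fit_gbt`, `predict_gbt`) and the toy data are made up for the example.

```python
import math

def fit_stump(xs, residuals):
    """Find the single split on x that best fits the residuals (least squares)."""
    best = None
    candidates = sorted(set(xs))
    for lo, hi in zip(candidates, candidates[1:]):
        threshold = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    if best is None:  # only one distinct x value: predict the mean everywhere
        mean = sum(residuals) / len(residuals)
        return (xs[0], mean, mean)
    return best[1:]

def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value

def fit_gbt(xs, ys, n_trees=50, learning_rate=0.3):
    """Build the ensemble one stump at a time; also return the training MSE per step."""
    base = sum(ys) / len(ys)            # start from a constant prediction
    predictions = [base] * len(ys)
    stumps, history = [], []
    for _ in range(n_trees):
        # For squared error, the negative gradient of the loss with respect
        # to the current prediction is simply the residual y - prediction.
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Take a small step in the direction suggested by the new tree.
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for x, p in zip(xs, predictions)]
        history.append(sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys))
    return (base, learning_rate, stumps), history

def predict_gbt(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)

# Toy data: approximate sin(x) on [0, 4).
xs = [i / 10 for i in range(40)]
ys = [math.sin(x) for x in xs]
model, history = fit_gbt(xs, ys)
print(f"training MSE after 1 tree: {history[0]:.4f}, after {len(history)} trees: {history[-1]:.4f}")
```

Each stump is fitted to what the previous trees got wrong, and the training error shrinks as trees are added. Real implementations use deeper trees, other loss functions, and regularization, but the loop has the same shape.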

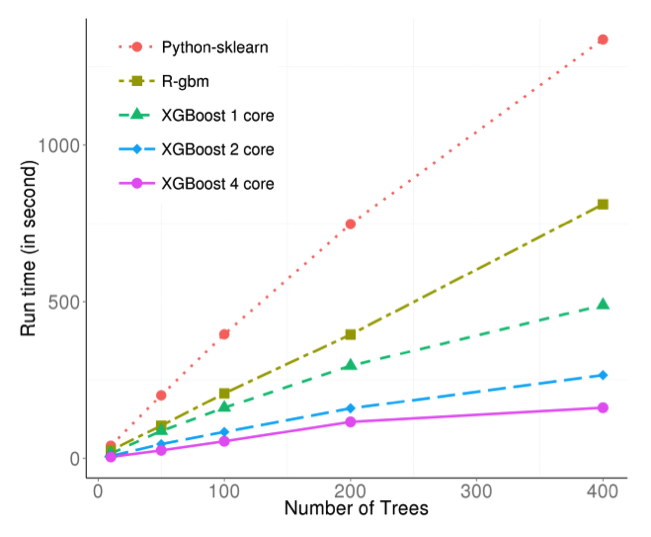

The tool typically used in these cases is XGBoost, an implementation of GBT that can be used from Python and R. Its success in competitions is due not only to its accuracy but also to the speed of iteration it allows. Even when running on a single core, it is twice as fast as R's gbm library and four times as fast as the scikit-learn implementation in Python. This comes from a series of optimizations in the implementation, and it can be accelerated further thanks to its compatibility with distributed computing frameworks such as MPI or YARN.